I'm archiving this project simply because there is no robust dataset available to build this model with. My various attempts at taking real-world terrain data, reversing the erosion process, and training on the result led only to shortcut learning and/or bad results. While there are some generative approaches you could take (such as diffusion models), they aren't an approach I'm interested in right now (I did investigate them in a branch of this repo). Feel free to open an issue if you have a question. Just know that the code here and the concept itself are still incomplete.

This project aims to create a deep learning network that accelerates terrain modeling.

To get started training a model, create a virtual environment and install the dependencies:

    python3 -m venv .venv
    . .venv/bin/activate
    pip3 install -r requirements.txt

Next, you'll need to download a DEM (digital elevation model) dataset from USGS. You'll want one with plenty of erosion-like features. For reference, TBDEMCB00225 from the CoNED TBDEM dataset is a good starting point.

Once you've got the ZIP file, extract it and place the TIFF file into data/TBDEMCB00225. You can give the sub-folder a different name if you'd like, but the entity ID is a good way to ensure it is unique.
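
If you'd rather script the extraction step, here is a minimal sketch using only the Python standard library. It is not part of this repo: the function name `extract_tiffs` and the ZIP file name in the usage comment are assumptions for illustration, and the sketch simply copies every `.tif`/`.tiff` member of the archive into the target folder.

```python
import shutil
import zipfile
from pathlib import Path


def extract_tiffs(zip_path, dest_dir):
    """Copy every .tif/.tiff member of a ZIP archive into dest_dir.

    Hypothetical helper, not part of deepslope. Sub-folders inside the
    archive are flattened so the TIFF lands directly in dest_dir.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    extracted = []
    with zipfile.ZipFile(zip_path) as archive:
        for member in archive.infolist():
            if member.is_dir():
                continue
            name = Path(member.filename).name
            if name.lower().endswith((".tif", ".tiff")):
                target = dest / name
                # Stream the member to disk instead of loading it into memory,
                # since DEM rasters can be large.
                with archive.open(member) as src, open(target, "wb") as dst:
                    shutil.copyfileobj(src, dst)
                extracted.append(target)
    return extracted


# Example usage (the ZIP file name is an assumption; use whatever USGS gave you):
# extract_tiffs("TBDEMCB00225.zip", "data/TBDEMCB00225")
```

The function returns the paths it wrote, which are the values you will later add to the configuration file.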

Now, you'll have to create a configuration file that describes how your model will be trained. An easy way to do this is to run the bootstrap module:

    python3 -m deepslope.bootstrap

VS Code users: You can also just run the Bootstrap launch configuration.

Open up config.json and look for dems.

Add to this array the path of the TIFF file you extracted from the USGS download.

For example:

    {
      "dems": [
        "data/TBDEMCB00225/Chesapeake_Topobathy_DEM_v1_148.TIF"
      ]
    }

The rest of the configuration fields can be left at their defaults.
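
If you prefer not to edit the file by hand, the same change can be scripted. This is a rough sketch, assuming only what is described above: that config.json is a JSON object with a `dems` array of paths. The helper name `add_dem` is hypothetical and not part of deepslope.

```python
import json
from pathlib import Path


def add_dem(config_path, dem_path):
    """Append dem_path to the "dems" array of a JSON config file.

    Hypothetical helper. All other fields are left untouched, and the
    path is not added twice if it is already listed.
    """
    path = Path(config_path)
    config = json.loads(path.read_text())
    # Create the array if the bootstrap output did not include one.
    dems = config.setdefault("dems", [])
    if dem_path not in dems:
        dems.append(dem_path)
    path.write_text(json.dumps(config, indent=2) + "\n")
    return config


# Example usage:
# add_dem("config.json", "data/TBDEMCB00225/Chesapeake_Topobathy_DEM_v1_148.TIF")
```

Note that the file is rewritten with two-space indentation, so the formatting may differ from what the bootstrap module produced even though the content is equivalent.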

In order to train the model, run:

    python3 -m deepslope.optim.train

VS Code users: You can run the Train Net launch configuration.

At the end of each epoch, a test is run and its output is saved to tmp in the repo directory. You can examine these files to monitor the training progress.
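
To find the most recent test outputs quickly during a long run, something like the following could help. It is a small sketch that makes no assumption about the file names or formats the training script writes; it only sorts whatever is in the folder by modification time. The function name `latest_outputs` is hypothetical.

```python
from pathlib import Path


def latest_outputs(tmp_dir="tmp", count=5):
    """Return up to `count` files from tmp_dir, newest first.

    Hypothetical helper, not part of deepslope. Files are ordered by
    modification time, so the latest epoch's output comes first.
    """
    files = [p for p in Path(tmp_dir).iterdir() if p.is_file()]
    files.sort(key=lambda p: p.stat().st_mtime, reverse=True)
    return files[:count]


# Example usage, run from the repo directory:
# for path in latest_outputs():
#     print(path)
```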

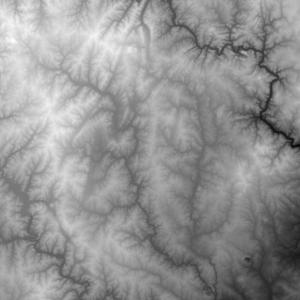

Here's a before-and-after render of the first prototype model. The input was made with simplex noise. This is primarily meant as a proof of concept; the model is still under design.